Image credit: Cnet

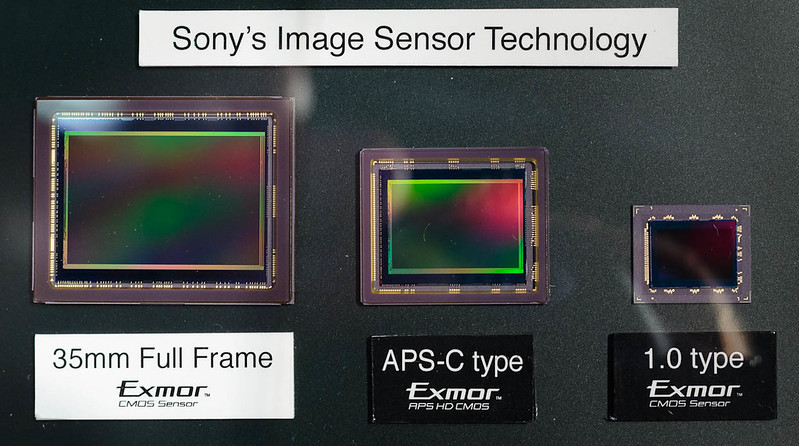

I’m sure you’ve all seen this Sony sensor size comparison chart at various fairs, on various sites, or in the simulated displays at their various retail outlets (no sensors were harmed in the making of these). The implication, of course, is that bigger is better; look how much bigger a sensor you can get from us! This is of course true: all other things being equal, the more light you can collect, the more information is recorded, and the better the image you’ll be able to output for a given field of view. However, I’m going to make a few predictions today about the way future digital sensor development is going to go – and with it, the development of the camera itself. Revisit this page in about five years; in the meantime, go back to making images after reading…

Differential size comparisons: small MF vs big M4/3.

1. Underlying sensor technology is converging.

Larger sensors used to be CCDs; smaller ones were CMOS. Now they’re all CMOS, and they’re slowly all moving towards putting the supporting circuitry on the rear so as to maximise light collection ability – the so-called ‘BSI’ (back-side illuminated) architecture. They’re not just all sporting microlens arrays, but microlens arrays and filter stacks that are designed as part of the overall optical formula so that both resolving power and light collection are maximised. Look closely into the white papers that get put out with every subsequent generation, and you’ll find that the same feature set that’s in the larger sensors is also in the smaller ones – and vice versa. The upshot is that the inequality in some areas of performance that previously existed (e.g. small sensors outperforming MF at high ISO because of the generational gap) will pretty much be eliminated.

2. The monopoly will want to maximise efficiency.

I suspect part of the reason we’re finally seeing this convergence is that the underlying designs are more scalable; not only does this simplify production and maximise the R&D dollar (especially since camera sales have been shrinking over the last few years) – it means that you can offer the same improvements to a much larger potential range of customers. Semiconductor fabrication is an expensive business: it’s not only highly capital-intensive because of the production hardware required, but also demands a high degree of supporting infrastructure and expertise. Not many companies can afford to do this, and with Sony slowly cleaning up the board, you can bet that the squeeze to increase profitability is going to start very, very soon. Using one underlying pixel-level architecture and scaling it is one way to do this.

3. Sensor size is likely to once again be directly proportional to raw output, processing aside.

Here’s what I think will happen in the long run: we’ll have one or two pixel sizes, and simply fill those across whatever overall area is desired. At the pixel level, readout and processing limitations aside, I think performance will be identical. More light collection area over the same angle of view will once again mean both more spatial and luminance information at finer gradations. In other words: in a way, digital will be like film again. For any given area of film, you can expect a certain amount of resolution since the underlying emulsion is the same. Thus, more performance requires a larger format – and the same will be true of digital sensors.
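To make the film-like scaling concrete: if every format used the same pixel, the pixel count (and the total light collected at a given exposure) would simply scale with sensor area. The sketch below assumes a hypothetical common pitch of 3.3 µm and nominal active areas of 17.3 × 13.0 mm for M4/3, 36 × 24 mm for full frame, and 44 × 33 mm and 54 × 40 mm for medium format; these are illustrative figures, not any manufacturer’s specification.

```python
import math

# Hypothetical shared pixel pitch in micrometres (an assumption for illustration).
PITCH_UM = 3.3

# Nominal active areas in millimetres (approximate, for illustration only).
FORMATS = {
    "M4/3": (17.3, 13.0),
    "FF": (36.0, 24.0),
    "MF 44x33": (44.0, 33.0),
    "MF 54x40": (54.0, 40.0),
}


def pixel_count_mp(width_mm: float, height_mm: float, pitch_um: float) -> float:
    """Megapixels that fit on a sensor of the given size at a fixed pixel pitch."""
    cols = math.floor(width_mm * 1000 / pitch_um)  # whole pixels across
    rows = math.floor(height_mm * 1000 / pitch_um)  # whole pixels down
    return cols * rows / 1e6


if __name__ == "__main__":
    base_mp = pixel_count_mp(*FORMATS["M4/3"], PITCH_UM)
    for name, (w, h) in FORMATS.items():
        mp = pixel_count_mp(w, h, PITCH_UM)
        # With identical pixels, this ratio tracks the area ratio almost exactly.
        print(f"{name:9s} {mp:6.1f} MP  ({mp / base_mp:4.2f}x M4/3)")
```

Under these assumptions the 54 × 40 mm sensor carries roughly 9.6 times the pixels of the M4/3 one, which is just the ratio of their areas: the same “more film area, more information” rule described above.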

However, the differentiators in final performance are likely to come from various technologies that can’t be implemented on all sensor sizes, or that have bottlenecks/limitations common to all sizes (e.g. maximum data processing rates). For example, the magnetic sensor suspension used on M4/3 cameras is significantly more effective than on FF; this has to do with the mass that must be moved/accelerated and the associated power consumption, plus the increase in angular resolution, which requires finer control at the same time. We might see optical IS on medium format – Pentax has already been doing it, though I find IS results in general are somewhat hit and miss beyond a certain resolution – but suspending a 54×40mm sensor plus mount and ancillaries just isn’t going to happen with the same efficiency as an M4/3 one. Similarly, whilst we may reach extremely high data read rates – the E-M1.2 can already manage 60fps at 20MP, which means 1200MP/s of data being read, processed and stored – the bottlenecks are likely to be common across all cameras. This is not an exact comparison, but scaling the same pixel density to 54×40 yields a 202MP sensor, and 1/10th the frame rate. The smaller sensor’s extra frames may well be processed in creative ways (pixel shift, noise averaging, etc.) to make up the single-capture gap – more on this later. I wouldn’t be surprised if, in practice and under less than ideal conditions, the gap between large and small sensors is far smaller (and less linear) than the numbers themselves would suggest.
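The throughput arithmetic in this paragraph can be written out as a quick sketch. It treats the 60fps × 20MP figure as a fixed readout budget and assumes a 17.3 × 13.0 mm M4/3 active area; with that assumption, scaling to 54 × 40 mm at the same density gives roughly 190MP rather than 202MP, which only underlines that the comparison is approximate. The last helper assumes uncorrelated noise and a static scene, so that averaging N frames improves signal-to-noise by roughly √N.

```python
import math


def throughput_mp_per_s(fps: float, megapixels: float) -> float:
    """Pixels read per second, in megapixels per second."""
    return fps * megapixels


def scaled_megapixels(base_mp: float, base_dims_mm: tuple, target_dims_mm: tuple) -> float:
    """Megapixels on another sensor size at the same pixel density (scales with area)."""
    base_area = base_dims_mm[0] * base_dims_mm[1]
    target_area = target_dims_mm[0] * target_dims_mm[1]
    return base_mp * target_area / base_area


def max_fps(budget_mp_per_s: float, megapixels: float) -> float:
    """Frame rate achievable if the readout/processing budget is the bottleneck."""
    return budget_mp_per_s / megapixels


def averaging_snr_gain(n_frames: float) -> float:
    """Approximate SNR improvement from averaging n frames with uncorrelated noise."""
    return math.sqrt(n_frames)


if __name__ == "__main__":
    budget = throughput_mp_per_s(60, 20)  # 1200 MP/s, as in the text
    big_mp = scaled_megapixels(20, (17.3, 13.0), (54.0, 40.0))  # assumed M4/3 size
    print(f"budget: {budget:.0f} MP/s")
    print(f"54x40 at same density: {big_mp:.0f} MP -> {max_fps(budget, big_mp):.1f} fps")
    print(f"article's 202 MP figure -> {max_fps(budget, 202):.1f} fps")
    # Same budget: one large frame, or roughly this many small frames to average.
    n_small = big_mp / 20
    print(f"~{n_small:.1f} small frames per large one, SNR gain ~{averaging_snr_gain(n_small):.1f}x")
```

Either way the frame rate lands near one tenth of 60fps, and the same budget buys the small sensor about ten frames to stack, which is one way the gap could narrow in practice.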

4. Unconventional sensor layouts are unlikely to become mainstream.

Whilst the various Foveon, X-Trans etc. options have the potential to extract more performance in various ways than an equivalent Bayer sensor, there are a few things that will eventually land up limiting their potential. Firstly, simple economics: the performance differential simply isn’t big enough to justify the R&D required to develop those alternative sensor architectures to the same level, which means not enough cameras get sold to fund it, and so on. Even though the Foveon designs may excel in color and spatial resolution over their Bayer counterparts, their trade-offs in high ISO performance and speed have not proven acceptable to most consumers. X-Trans has fewer trade-offs, but until recently the post-processing workflow has not been ideal, and significant processing happens between the sensor and the raw file, not just the camera JPEG – leading to raw files that actually have much less latitude than you’d expect (especially in shadow recovery). The only thing we’re likely to see is some form of pixel binning for cases where massive output sizes are not required, since that can still use the underlying Bayer architecture.
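The binning point can be illustrated with a minimal sketch: averaging the four nearest same-colour sites of an RGGB mosaic produces a half-resolution image that is still an RGGB mosaic, so the usual Bayer demosaicing pipeline applies unchanged. This is digital averaging on a plain Python list of lists, chosen for clarity; real sensors may bin in the charge or analogue domain, or use other layouts (e.g. Quad Bayer), so treat it as an illustration of the idea rather than any manufacturer’s method.

```python
def bin_bayer_2x2(mosaic):
    """Average same-colour sites of an RGGB Bayer mosaic, halving each dimension.

    `mosaic` is a list of equal-length rows whose height and width are multiples
    of 4. Each 4x4 tile holds four samples of every CFA position; averaging them
    yields a 2x2 tile with the same colour layout as the original.
    """
    h, w = len(mosaic), len(mosaic[0])
    if h % 4 or w % 4:
        raise ValueError("mosaic height and width must be multiples of 4")
    out = [[0.0] * (w // 2) for _ in range(h // 2)]
    for ty in range(0, h, 4):
        for tx in range(0, w, 4):
            for dy in range(2):      # CFA row within the 2x2 pattern
                for dx in range(2):  # CFA column within the 2x2 pattern
                    y, x = ty + dy, tx + dx
                    # Same-colour neighbours sit 2 pixels apart on each axis.
                    total = (mosaic[y][x] + mosaic[y][x + 2]
                             + mosaic[y + 2][x] + mosaic[y + 2][x + 2])
                    out[ty // 2 + dy][tx // 2 + dx] = total / 4
    return out


if __name__ == "__main__":
    # 4x4 RGGB mosaic with R=10, G=20, B=30 at every site of each colour.
    colour = {(0, 0): 10, (0, 1): 20, (1, 0): 20, (1, 1): 30}
    raw = [[colour[(r % 2, c % 2)] for c in range(4)] for r in range(4)]
    print(bin_bayer_2x2(raw))  # still an RGGB tile: [[10.0, 20.0], [20.0, 30.0]]
```

Each output value averages four samples, so with uncorrelated noise it is roughly twice as clean as a single site, at a quarter of the pixel count.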

5. Computational photography will provide the Next Big Leap.

We passed sufficiency at the high end long ago; we’ve passed it at the middle price point, and we’ve now passed it at the consumer level, too. Even taking state-of-the-art displays like those high-density 4K smartphones and iPads into account (didn’t I predict display media would be the next advance many years ago with Ultraprinting?), we still have enough pixels to go around. The consumer world now at least appreciates that more information density looks better – even if it doesn’t precisely understand why. However, physical limits to our vision mean that we may not need much more information for the majority of uses, simply because we lack the ability to absorb it all. What we can and do appreciate, however, is hardware that gets us to the same point – or provides more options, like cropping to simulate longer lenses or pixel binning in very low light – without the current weight penalties.
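The trade-off behind cropping to simulate a longer lens is simple arithmetic: cropping by a linear factor k multiplies the effective focal length by k and divides the pixel count by k². The sketch below assumes a 20MP capture and a 3840 × 2160 (4K UHD) display as the yardstick for “enough pixels”; both figures are illustrative.

```python
import math


def crop_result(megapixels: float, focal_mm: float, target_focal_mm: float):
    """Effective focal length and remaining megapixels after cropping.

    Cropping to the angle of view of `target_focal_mm` is a linear crop by
    k = target / focal; the pixel count falls with the square of k.
    """
    k = target_focal_mm / focal_mm
    if k < 1:
        raise ValueError("cropping can only lengthen the effective focal length")
    return target_focal_mm, megapixels / k ** 2


def max_crop_for_display(megapixels: float, display_w: int, display_h: int) -> float:
    """Largest linear crop that still matches the display's native pixel count.

    Compares pixel counts only; aspect-ratio differences are ignored.
    """
    display_mp = display_w * display_h / 1e6
    return math.sqrt(megapixels / display_mp)


if __name__ == "__main__":
    focal, remaining = crop_result(20, 25, 50)
    print(f"25mm cropped to {focal:.0f}mm framing leaves {remaining:.1f} MP")
    k = max_crop_for_display(20, 3840, 2160)
    print(f"20 MP can be cropped about {k:.2f}x before dropping below 4K UHD")
```

Doubling the effective focal length leaves a quarter of the pixels, and a 20MP file only has headroom for about a 1.55× crop before it falls below the native pixel count of a 4K display.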

Whilst companies like Lytro and Light have tried, with somewhat mixed results, I’d say that the approach simply hasn’t been consumer-friendly. Beyond the extremely passionate, the technical execution under the hood does not matter; only the ease of use of the chosen implementation and the results do. Trying to force-fit the capabilities of the new technology into the existing photographic framework doesn’t make sense, either; we may well need to accept a much simpler terminology, at least at the consumer end – e.g. ‘background: more blur, less blur’ and a slider. Even for the serious, I’ve come to realise I don’t really care what the numbers say so long as I can get a) the exposure I want, and b) the visual look I want. If sliders simplify the UI, and the rest of the information is available on demand if you need to calculate flash power etc. – then what’s wrong with that? The proof is the number of serious, knowledgeable photographers who do just fine with an iPhone (myself included) and have no clue what the exposure parameters actually are, other than that we can focus and meter on what we want, and make the capture brighter or darker.

I actually feel encouraged by this: less focus on the how, more focus on the why, and on the image. It’s the modern photographic revolution, redux: instead of showing us the source code and making the boxes available to all, it’s the Apple-ization of photography, and a rejuvenation of the film point-and-shoot. I’m about as far from being a hipster as you can get, and don’t like Instax, but I suspect that this will actually stick, because we’ll be spending more brain power on making images and less on buying gear. And that is a Very Good Thing. MT

__________________


Images and content copyright Ming Thein | mingthein.com 2012 onwards. All rights reserved

Oh, Ming, now you’ve done it, called the guild on its own predilections. Computational photography is changing the way we take pictures, and for the better. There is a better way of taking shots than with the photographic equivalent of a synchroless stick shift car, but it sidelines all the expertise one builds up over the years and eventually entombs. At CP+ Sony just introduced a 1000fps 20MP 3-layer phone sensor module that enables 120fps picture taking with near-zero jello effect. Similar to what’s in the RX10III and A6500, if I’m not mistaken. And Eric Fossum is pushing towards making a quantum leap in imaging versatility with the QIS. At some point we may figure out a less lossy color filtration scheme than Bayer, which will give a few more photons a chance to be converted, but in the meantime the software folks will be delivering staggering and staggeringly useful capabilities to things that look more and more like a smartphone.

I tend to agree that you will see a building block approach to sensor production in the future. While I’m not sure that I agree with you that the block will be a certain size pixel (much of the design of a sensor lies in the minimization of variance of performance across the chip), certainly the architecture is now common across pretty much all sensor sizes.

As my DSLR gathers more dust, and I find myself more in the moment using my smartphone and standing amazed at what Hasselblad pulled off with the X1D, a future of smartphones with grips festooned with essential controls and multiple lens/sensor modules doing things unimaginable a few years ago sounds great. Perfect timing for that higher energy density battery technology…

Yet with all this, fundamentally, the question of how to control it simply, have the technology work for us and maximise flexibility has yet to be really well addressed… The iPhone UI perhaps does it best, but the current ‘best of the bunch’ dedicated cameras are woefully over-complex in comparison. I’ve grown to like the E-M1.2 very much, but oh God – the menus!

Very interesting read. Many thanks.

To me, the sensor market will split into two areas: one for “traditional” cameras, where the described outlook seems very plausible.

The other is the market for smartphones and industrial applications like self-driving cars. I assume that is where the volume and money, and consequently the technical advances, will be. For example, I expect curved sensors to show up in phones first, especially if they would allow e.g. slim tele lenses in phones.

It’s also much easier to make a small curved sensor than say, a 645-sized one 🙂

Lots to chew on in this thread. My general thoughts regarding this thread are as follows –

1) We are clearly at the point of diminishing returns when it comes to sensor performance.

2) Most people don’t print, so why do they even worry about better capture technology?

3) We are too focused on technology and not enough on making meaningful images – would more resolution have helped the great photographers of the 20th century?

4) Image manipulation has been with us since the beginning but the tools today make it easier – Ansel Adams and Jerry Uelsmann are examples. Don’t forget photographers have always manipulated the subject before capture. That’s often more misleading than what is done afterwards.

5) There is no objective media or photojournalist – it’s always been easy to distort the facts with words and now it’s easy with images.

6) The art form of photography has been evolving for a long time but now it’s accelerating. When you look at most MFA programs in photography they teach multi-media. Photography is often taught as another arrow in the quiver for artists to use to express themselves.

To point 2: better dynamic range is still visible in small, digital-only output – not just in prints.

3. Agreed, and I’ve been trying to say this for a long time – only to be conveniently ignored 🙂

Enjoyable forecasts and discussion. As Robin Williams once said, “Reality – what a concept”. When post-processing, I often ask myself questions like:

– is this faithful to my memory of the scene?

– is this how I just prefer the image to look?

– is this how I think my Facebook friends would prefer to see the image?

– is removing distractions from the scene OK for this image, or does it degrade context or is it deceptive?

– cropping – all of the above questions plus a few more

I do not have ready answers to these questions but I think it is important to ask them. Of course context, artistic intent, and use of the image (art vs photojournalism) impact the final decisions.

I confess that while I myself don’t like over-glamorized people shots, I’ve done it a few times and people just loved it. Afterwards, though, I felt bad about it, and I’m unlikely to repeat it. I don’t need the applause, and life is too short.

Absolutely. These aren’t easy questions to answer because of subjective and perceptual relativity; what’s normal for you might be dull for somebody else, and vice versa. Or worse, they relate to situations we don’t have any personal experience with, which makes it even harder to judge ‘normal’.

Hi Ming

I read about something like “nano dot quantum” sensor tech (probably got the name wrong).

The promised advantages included compressed/rolled off highlights and greater tolerance to non telecentric lenses. Not sure if anything will come of it though.

There may be other tech that could provide actual photographic advantages beyond just pixel numbers. Let’s live in hope.

Regards

I saw the patent filing too, but it was a bit vague on the implementation front – I suppose we’ll know more as the technology matures…

It is all about the look of the camera and lens and what is trendy. Marketing has played a huge influence in photography and is neglected in this otherwise very informative essay.

In general, the look and style of the camera is more of a driving force than performance. You mention, rightfully so, the performance of the Foveon sensors, but look at the recent cameras they have put out that get very little interest. Why? Because they are hideous. If Sigma produced a camera that looked like an X-Pro, it would have been much better received.

My guess is this is why Canon and Nikon have been slow to progress. Same with Leica.

Sony has been kicking butt because their technological advancements are housed in attractive cameras and lenses. Just look at Samsung, a very advanced technology company that imitated the same old same old with an ugly product. Now they are out of the camera market.

To tie in the article, with performance narrowing, marketing is all that matters. This is why the HB X1D is going to sell like hotcakes. Everyone loves it before they have seen sufficient imagery.

First post but thought it was worth my 2 cents.

Interesting counterpoint. Would you select a camera based on looks first? What if ergonomics are terrible, like the Sony cameras? Or if the controls fail to take into account the requirements of digital photography (as opposed to film), like the Leicas? Surely this makes no sense, otherwise we’d all be using horse-drawn carriages because they look better…

I know plenty of people – pros and amateurs alike – who prioritise performance and ergonomics far above the way a device looks…they’re tools, not posing accessories (at least they are if your intention is to be a photographer 🙂 )

Hi Ming,

It depends on how far you take it. As a young chap starting out in photography, I picked my first camera, a Canon EOS 50e, with zero knowledge about photography or cameras, based on how it looked and felt; I thought it looked great at the time. By the time digital had arrived I was into the Canon system, and it felt natural to move into the Canon DSLR ecosystem. Despite a few occasions where I considered moving to Nikon, I resisted, based primarily on my preference for the Canon lens lineup (tilt-shifts). However, I would be lying if I said that camera aesthetics didn’t have a role to play; put simply, I prefer the way Canons look over Nikon, Sony et al. My main profession is in design, and while I am fairly knowledgeable about photography equipment now and a reasonably good photographer (perhaps more on the technical side than the finer elements of composition, but I strive to improve), I can’t help being led a bit by my own design taste. I just don’t think I could bring myself to buy a camera that’s ugly, however well it performs. I think camera aesthetics, and how marketing companies push them, are still very significant in this industry. I don’t think people like to admit this, but at some level it affects us all.

Ben

I can see ergonomics affecting aesthetics, but not picking something pretty over something functional – I believe there’s such a thing as functional beauty, too (e.g. fan blades on an aircraft engine). Most cameras tend to fall into the latter category anyway; few are truly ugly and few are truly beautiful. There’s also a reason why I don’t comment on this in reviews, it’s simply too subjective 🙂

Just curious: by controls like on the Leicas I guess you mean the shutter and aperture dials? What requirements of digital photography are not taken into account with such controls? They make the PASM mode dial redundant (at least when the aperture is controlled electronically like on the Fujifilms)? The only drawbacks I can think of in terms of usability are the full stop increments on the shutter speed dial and the need for two-handed operation.

Exposure compensation on an M body is clunky at best, impossible at worst (earlier Ms). The grips are not ergonomic, and the cameras tend to twist out of your hands without auxiliary support (front grip, Thumbs Up), which shouldn’t be necessary on a camera at that price – the proliferation of these accessories says that it’s a real problem. On top of that, let’s not go into how archaic the whole RF system is for critical and consistent focus, not to mention increasingly impractical with longer lenses, both for accuracy of framing and focus, since your magnification decreases…

I agree on everything, but all of this also applies to film photography, sometimes to a lesser but still significant extent. So it’s not the classical controls that are the culprit per se, but not exploiting the advantages that digital photography has to offer in terms of focusing and metering. I find Fujifilm’s approach to be almost perfect in this regard.

Yes, there are negatives to rangefinder cameras, but also clear positives, especially if you choose the camera precisely because of its strengths. An environmental portrait/reportage photographer who likes to shoot between 28-50mm may not have any interest in telephoto lenses for sports/nature etc., or even more extreme wide-angles, at least not often. I think this is often forgotten when more generalist photographers, who want an all-purpose camera for everything from macro to 600mm bird lenses, think about Leica Ms.

The rangefinder M cameras allow quick lens prefocus by feel of the tab position, faster than any autofocus. They allow previsualisation through the rangefinder without the shallow DoF of TTL focusing, which is useful and much clearer for complex scenes of moving individuals, and they allow the user to see things coming into view from outside the frame. There is also no viewfinder blackout when the image is taken. The rangefinder is also very accurate for quick manual focus – I’ve hardly ever missed focus with a 50/1.4, even on prints blown up to 36×24. All of these attributes can be real strengths in a film or digital body – at least up to 24MP.

I’m well aware of this because I used nothing but Leica M for a couple of years. You can’t frame or focus accurately with anything over 75mm – which isn’t a birding lens. It’s barely even a portrait lens.

The thing I always wonder about sensor technology is if Bayer is actually better than alternative technologies or if it has just had more time to mature properly.

Bayer sensors have had the better part of two decades in the mainstream, lots of manufacturers, and just plain momentum behind them to propel them to the level they are currently. X-trans is only on the 2nd or 3rd generation, and Foveon is older but somewhere similar. Remember what even the better Bayer sensors were like in, say, 2005?

Also, tying alternative sensor designs to one manufacturer doesn’t give us a full picture of how well the design can actually perform. Wouldn’t it be nice if we could have a real uncooked RAW from an X-Trans sensor? Or if we had a manufacturer with less of a track record of weirdness than Sigma producing stacked sensors? It really is a monopoly out there in the sensor manufacturing world.

I wonder whether, if allowed to mature over time, these alternative technologies will surpass what we are used to from the current generation of Bayer sensors. But I also wonder if they will ever be allowed to fully mature, considering that Bayer sensors are more than good enough at this point.

I did a little foray into X-Trans a few years back and was shocked by how the images just weren’t as “nice” as what I was used to with Bayer, for a variety of reasons. X-Trans output gets better and better with each generation of software, but it still lags behind; I assume because it’s newer technology and hasn’t had the time to mature on the processing side. (Regardless, I think the X-Trans design is a bit of an anachronism, considering that pixel pitch has gotten so small that moiré and AA filters are becoming a thing of the past. Cool idea, though…)

Either way, I’m happy with my full frame Nikons. I have never really felt the itch to try something else. We’re way past the point of what we need to make great images, regardless of sensor design.

Good question: it’s not a fair comparison by any means. Bayer is mature, but not necessarily a better end point – though we’ll never know, because some improvements (e.g. smaller fab process) are equally applicable to both Foveon and Bayer (or X-Trans).

Richard,

After many hours of self-directed research and overturning quite a few stones, I can only come to the conclusion that unique benefits from various sensor forms are derived from proprietary demosaicing algorithms which the camera manufacturer does not release to outside vendors (Adobe, Phase One, DxO, etc.). Fuji (and Nikon) appear to be among the worst offenders in this aspect.

An ideal future, I feel, would be one of disclosed debayering/demosaicing methods, regardless of sensor design.

Yes… There’s a layer to raw processing that isn’t quite raw. My personal X-Trans vs Bayer experiments showed me how much that “under the hood” stuff (debayering, demosaicing, etc.) really does make a difference, and we don’t really have any control over it in an ACR/Lightroom environment. I’ve tried some of those really raw raw processors and they’re just too clunky to get consistently good results in the real world. Those software packages show the difference between mature processing algorithms (Adobe, and I’m assuming Capture One) and the less mature ones (the third-party stuff). No matter how much I wrestled with the other demosaicing/debayering algorithms, the Adobe stuff just looked better more consistently.

Don’t even get me started on the whole cooked raw thing (I’m looking at you, Sony and Fuji…)

It would be nice if everything was standard, like if Lightroom/ACR could apply demosaicing, sharpening, etc. custom to each sensor to give some kind of standard result and we could tweak to taste. I think it already does this with noise reduction at different ISOs.

But again, I’m happy with the results I get and am not really looking to improve that much. My workflow is quick and consistent. There may be something better out there but I can’t see it being worth the trouble.

“…the Adobe stuff just looked better more consistently.”

I’m going to add to that: across a wider range of cameras. This is very important if you need a variety of tools for a job, which I frequently do…

I doubt we’ll see a totally uncooked standard anytime soon: the problem with this is then basically every camera with the same sensor has little else to differentiate it other than optics and ergonomics, and some manufacturers might actually have some work to do to stay in business! 🙂

There was quite a furore some years back when Nikon refused to release the color data for the D3X, I recall…

I nevertheless still believe that sensor tech improvements will flow into smaller cameras much more quickly. APS-C cameras are upgraded nearly every year; the scale of production makes changing the design every year possible. As I’m most familiar with Pentax cameras, most of my observations come from there: in APS-C, the sensor gets improved every other year. In the years in between, AF and other usability aspects are addressed.

Medium format cameras have a much longer production cycle (around 4 years). So after 2 years, the sensor most likely will no longer have the latest technology. Not to mention other features like AF precision, which don’t get upgraded on a yearly basis either.

As a matter of fact, the autofocus capability of the 645Z is K-3 technology, and has been eclipsed by the K-1 without the 645Z getting an upgrade in the meantime.

To me for “getting an image” things like AF precision are becoming equally or even more important than sensor tech.

I agree that the ancillary supporting systems are just as important, especially as sensors increase in capability and loose tolerances elsewhere become more of a problem. But I’d also argue that as much as APS-C has improved, it’s still not on par at the pixel level with the 645Z, let alone the new 100MP cameras. Remember: the consumer cameras have to be designed to a cost; MF generally doesn’t.

Aside from sensor tech, which has probably reached a certain limit (a milestone), don’t you think more R&D should be focused on developing better or revolutionary optics? Are Canon’s diffractive optics perhaps the next best thing?

DO lenses are great size-wise, but do have some strange artefacts that can land up being very distracting; as armiali pointed out in another comment, sensor tech isn’t independent of optics since the two have to be complementary – how many times have we seen otherwise excellent adapted lenses fall apart on non-native formats? A good example is M4/3: the very thick filter pack compared to other cameras means that only longer adapted lenses work well, unless they are extremely telecentric.

I believe something else is coming as well — digital imaging will have less to do with what we think of as photography, it will become more like computer graphics.

If you add together all the stuff being described in the small tech and research news at sites like Dpreview or Petapixel into one gadget or one app, you will have something very different than a camera. I’m thinking about news like this:

– remove a wire fence from a picture, or remove reflections if you shoot through a window

– a cell phone app that takes several rapid shots and merges them into one picture where all your friends have their “best”, smiling faces

– translation technology integrated in your camera: take a picture in Moscow, and street signs etc. will be replaced with English

– edit the weather or time of day after the picture is taken: make a cloudy day sunny or change day to evening

– make the sky blue instead of smoggy

– move around inside the picture after it was taken: perhaps your shot would look better if you take three steps to the right

All this and more already exists at the research stage. In a few years, we might have a machine that incorporates all of this into one app or gadget. This might still be called a camera, but it will no longer behave like a camera. It will not depict reality, it will edit reality. It will show you a scene that never existed. Take your holiday pictures with a gadget like this and they will have no more connection to reality than a child’s drawing of the same holiday, a drawing where the sun always shines and everyone wears a happy face.

Ming – I would like to hear what you say about this.

I’m actually a little worried about that – I agree, it’s happening, and apps like Snapchat are the very rudimentary infancy. But I don’t think this is photography; manipulation has existed since the days of film and somehow never really went past gimmick. The one factor that may change this is the human desire to show off via social media…

Fortunately, I think there will still remain a good number of people who do want to remember and represent reality, because often – if one is a careful observer, it’s usually more rewarding and surprising than imagination, which is by definition limited to only what one has already experienced in some form. The whole point of photography is to share a perspective that perhaps hasn’t otherwise been seen before, or is seen only infrequently – if we take this away, then there seems to be a lot less purpose to the exercise.

Reality, “if one is a careful observer, it’s usually more rewarding and surprising than imagination”

I agree completely with this, and I do hope you are right about the future of photography. Manipulation has always been a part of the history of photography, but what I tried to describe is something entirely different.

This technology will not be limited to social media. Imagine what could be done if image manipulation of this type is applied to fake news. We will not be able to trust one single digital image in the news flow.

Years ago, a news site showed Tiger Woods fighting with his girlfriend, hot news at the time. They didn’t have any images from the scene, they didn’t even know exactly what had happened, but they felt they must have pictures, so they made a crude computer animation of what could have happened. I’m still thinking of this scene — I believe we will see much more of this soon. Today, computer graphics can be blended into cinema scenes without anyone noticing. I don’t mean dinosaurs and spaceships, I’m thinking of ordinary scenes: buildings, landscapes, people. When will this technology enter the news? Technology of this type means that no digital image contains any more information than digital images in a computer game.

I don’t think news has been objective for some time – it’s always had observational bias. Photography has been presented as yes, observationally biased too, but at least what is visible physically exists – the reality you’re suggesting is in a way reverting to a time when the newspaper illustrations were etchings…

“reverting to a time when the newspaper illustrations were etchings…”

Yeah, sort of 🙂 But at that time, anyone could tell that an etching was an etching (or a wood engraving, which was very common during the late 19th century). It didn’t look like a slice of reality.

Manipulation en masse without forethought or vision, much like wanton cropping? 😛

Moreover, it seems like extraordinary profit and wealth generation will drive this “image manipulation” at ultra speed. Some of you may have seen the news report about the twenty-something (?) Chinese woman who developed an app for iPhone photos that would “fix them up,” from blemishes to distortion corrections, thinning the face, and so forth, and that jumped to millions of users in China, even though there was no way to make any money with it yet. Supposedly, it went public in an IPO, attracted something like US $400,000 in initial investment, and from there reached an estimated stock value somewhere between two and four billion US dollars. That’s with a “B.” Unconfirmed and not validated . . . but read on the web. We’ve already seen similar purchases of image technology (apps) in the US, up in the billions of dollars. What young programmer wouldn’t want to pursue some of that fame and fortune? What couldn’t Ming do with a billion dollars in capital underneath him?

Having something people want for free and something people will pay for are two very different things. I think we are actually ripe for another internet crash when investors realise there is no way to monetise some of these massively expensive bits of software other than advertising.

A billion dollars? I’d retire and spend my time taking pictures for me, and only me. Or maybe I’d have bought Hasselblad.

“Or maybe I’d have bought Hasselblad.”

Haha!

Point No. 3 is already happening!

See https://techcrunch.com/2017/01/26/google-translate-now-provides-live-translation-of-japanese-text/

Great read. I have to agree, since these are all based on logic. Though I really wanted the industry to move on from CMOS. (If you haven’t shot with a Foveon at ISO 100, don’t do it: the color depth is not something that’s easy to get over.)

The only option left is for manufacturers to get creative. The curved sensor is the most promising, IMO. It does not require redesigning the whole software and/or changing the sensor’s internal hardware (that much), only redesigning the lenses entirely. I am really hoping they get to it. Smaller lenses without any correction elements (i.e. the awful aspherics) will go far on the “rendering/look” of the image.

Now back to shooting…

I agree, though the only problem is Foveon doesn’t work under all situations: if you run out of light, you may have a bit of a problem, and Bayer sensors quickly leap ahead. Curved sensors promise gains on the optical side – easier to design more highly corrected lenses, which are also smaller. But manufacturing is going to be a pain…

Good thoughts and a very nice essay, Ming. Thank you.

Pleasure!